In today’s digital landscape, users have little tolerance for sluggish websites and mobile apps. Even a slight loading delay can lead to frustrated users, higher bounce rates, and missed opportunities. This is why optimizing your app’s speed is crucial: it means fine-tuning your application to deliver a fast, smooth user experience. In this article, we look at methods, tactics, and recommended practices to make sure your app excels in speed, performance, and user satisfaction.

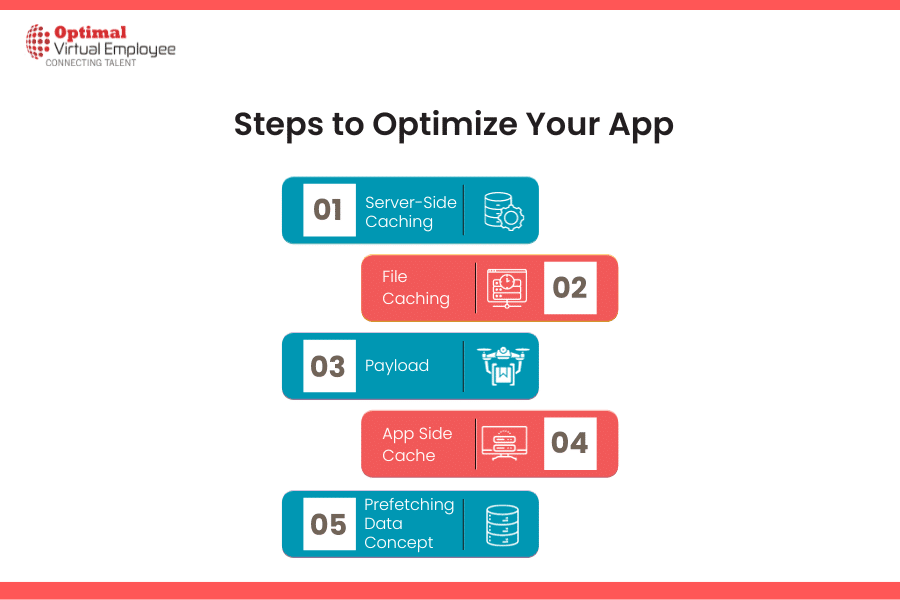

Let’s start from the server side.

Server-Side Caching Explained:

Server-side caching is the practice of temporarily storing frequently requested data either in the server’s memory or on its disk. When a user requests specific content, the server can deliver this cached data right away rather than regenerating it. The outcome is a lighter server load and much quicker response times. Among the various types of server-side caching, this article focuses on File Caching.

Understanding File Caching for REST APIs:

In the context of REST APIs, file caching involves saving the responses to API calls as cached files on the server. When a client issues a request, the server can promptly serve the cached data, bypassing the need to re-run the entire request and processing logic. This results in both lower server load and faster API response times.
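
To make the idea concrete, here is a minimal sketch of file caching written in Python. It is not the code our server runs. The cache directory, the 60-second freshness window, and the names `cache_path` and `cached_response` are all assumptions chosen for illustration.

```python
import hashlib
import json
import tempfile
import time
from pathlib import Path

# Hypothetical cache location and freshness window, chosen for this sketch only.
CACHE_DIR = Path(tempfile.gettempdir()) / "api_file_cache"
TTL_SECONDS = 60


def cache_path(request_key: str) -> Path:
    """Map a request (for example method + URL + query) to a cache file."""
    digest = hashlib.sha256(request_key.encode("utf-8")).hexdigest()
    return CACHE_DIR / f"{digest}.json"


def cached_response(request_key: str, build_response, ttl: float = TTL_SECONDS):
    """Return the cached response if it is still fresh, otherwise rebuild and store it."""
    path = cache_path(request_key)
    if path.exists() and time.time() - path.stat().st_mtime < ttl:
        # Cache hit: skip the request processing logic entirely.
        return json.loads(path.read_text(encoding="utf-8"))

    # Cache miss or stale file: run the expensive logic once and save the result.
    response = build_response()
    CACHE_DIR.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(response), encoding="utf-8")
    return response
```

A handler would call something like `cached_response("GET /home", build_home_page)`, where `build_home_page` stands for whatever function normally queries the database and assembles the response.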

Why File Caching for REST APIs is Important:

Quicker Responses: Using cached data allows the API to deliver nearly immediate answers to client requests, minimizing waiting periods.

Lighter Server Load: Caching lessens the server’s workload by reducing the necessity for repeated computations, database interactions, or intricate processing.

Bandwidth Efficiency: Caching decreases the amount of data transferred between the server and clients, thereby conserving bandwidth.

Improved Scalability: File caching boosts the API’s ability to scale, as the server can handle more requests without requiring extra resources.

Consistent Performance: Caching helps maintain steady response times, even during instances of high traffic or increased demand.

By understanding and implementing file caching, you can significantly enhance your API’s performance and reliability.

How to Sync Updated Data with Cached Data:

ETag Explained:

An ETag is a unique string token that a server attaches to a response to specifically identify its current state.

Should the content at a given URL change, a new ETag will be generated. By comparing ETags, it’s possible to determine whether two versions of a response are identical. When the app requests the content again, it sends the ETag it stored earlier in the If-None-Match request header, and the server checks it against the ETag of the current content. If they match, the server returns a 304 Not Modified status with no body, indicating to the app that its cached data is still valid. If they differ, the server returns the full response along with the new ETag.
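
The exchange can be sketched in a few lines of Python. This is a simplified illustration, not our server's implementation. Hashing the body is only one common way to derive an ETag, the function names `make_etag` and `respond` are hypothetical, and a real If-None-Match header may carry several ETags, which this sketch does not handle.

```python
import hashlib


def make_etag(body: bytes) -> str:
    """Derive an ETag from the response body (an assumption: one common approach)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes, request_headers: dict) -> tuple:
    """Return (status, headers, body); answer 304 when the client's copy is current."""
    etag = make_etag(body)
    if request_headers.get("If-None-Match") == etag:
        # The client already holds this exact version, so send no body.
        return 304, {"ETag": etag}, b""
    # First request, or the content changed: send the full body and its ETag.
    return 200, {"ETag": etag}, body
```

The app stores the `ETag` header from each 200 response next to the cached body and sends it back on the next request. A 304 then costs only the headers, not the payload.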

The statistics above show the impact on response times for our Home Page API when using this caching method.

Understanding Image Caching:

Image caching involves storing images in cache memory to enhance an application’s performance. The next time the app needs the image, it first checks the cache. If the image is available there, the app will load it from the cache instead of fetching it anew.
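
The check-the-cache-first flow can be sketched as follows. This is an illustrative in-memory version in Python, not the caching library our app uses. The class name `ImageCache`, the `fetch` callback, and the eviction of the least recently used image once `max_items` is exceeded are assumptions added for the sketch.

```python
from collections import OrderedDict


class ImageCache:
    """Keep recently used images in memory so repeat loads skip the network."""

    def __init__(self, fetch, max_items=100):
        self._fetch = fetch          # hypothetical function: url -> image bytes
        self._max_items = max_items  # assumed size limit for this sketch
        self._items = OrderedDict()

    def get(self, url):
        if url in self._items:
            # Cache hit: mark the image as recently used and return it.
            self._items.move_to_end(url)
            return self._items[url]
        # Cache miss: download once, then remember the result.
        data = self._fetch(url)
        self._items[url] = data
        if len(self._items) > self._max_items:
            # Drop the least recently used image to bound memory use.
            self._items.popitem(last=False)
        return data
```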

Why Image Caching Matters:

Faster Load Times: By caching images, the app reduces the time needed to load, providing users with quicker access to product images or content.

Elevated User Experience: Speedier loading times contribute to a better overall user experience.

Mobile Performance Boost: Cached images do not have to be downloaded again, which reduces network usage and image load time on mobile connections.

By leveraging ETags and Image Caching, you can significantly improve both the performance and user experience of your application.

Payload:

Payloads in Networking: Understanding Their Impact on Speed

A payload is the portion of a network packet that actually carries the data. An application can send its data as a single payload or split it across multiple payloads, and each approach offers distinct speed advantages depending on your application’s specific needs.

Advantages of Using Single Payloads:

Lower Overhead: Utilizing a single payload can reduce the extra effort associated with handling multiple messages or requests, making communication between system components more efficient. This is particularly beneficial in scenarios requiring frequent data exchanges.

Reduced Network Latency: When network lag is an issue, using a single payload can minimize the number of round-trips between the client and the server, thereby accelerating data transfer.

Advantages of Batch Processing with a Single Payload:

Efficiency in Processing: Aggregating multiple records or operations into a single payload can make batch processing more efficient, as the server can deal with multiple items in one go.
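
The difference in round-trips is easy to see with a toy example. The Python sketch below uses a made-up `FakeServer` that only counts the requests it receives. The class and both loader functions are hypothetical and stand in for real network calls.

```python
class FakeServer:
    """Stand-in for a remote API that counts how many requests it receives."""

    def __init__(self, catalog):
        self.catalog = catalog
        self.requests = 0

    def get_product(self, product_id):
        self.requests += 1  # one round-trip per product
        return self.catalog[product_id]

    def get_products(self, product_ids):
        self.requests += 1  # one round-trip for the whole batch
        return [self.catalog[pid] for pid in product_ids]


def load_one_by_one(server, product_ids):
    """Fetch each product with its own request."""
    return [server.get_product(pid) for pid in product_ids]


def load_as_batch(server, product_ids):
    """Fetch all products in a single payload."""
    return server.get_products(product_ids)
```

Both loaders return the same data, but for three products the first one costs three requests and the second costs one. On a slow mobile connection each saved round-trip matters.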

Perks of Multiple Payloads:

Parallel Processing: Multiple payloads make it possible for various parts of a system to process data concurrently. This can increase data throughput and reduce processing time.
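
As a rough sketch of this idea, the Python function below requests several independent payloads at the same time using a thread pool. The function name `load_sections` and the `fetch_section` callback are assumptions. In a real app `fetch_section` would perform one API call per section.

```python
from concurrent.futures import ThreadPoolExecutor


def load_sections(fetch_section, section_names):
    """Fetch every section concurrently and return {name: payload}."""
    if not section_names:
        return {}
    with ThreadPoolExecutor(max_workers=len(section_names)) as pool:
        # map() runs the calls in parallel but yields results in input order.
        payloads = pool.map(fetch_section, section_names)
        return dict(zip(section_names, payloads))
```

With this approach the total wait is close to the slowest single request rather than the sum of all requests.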

Benefits for Real-Time Data:

Continuous Data Flow: For real-time or streaming data, multiple payloads can facilitate an uninterrupted flow of information, leading to timely updates and a more responsive system.

Why Scalability Matters:

Load Balancing: Distributing data across multiple payloads allows for effective load balancing and enhanced scalability, as different components can work on separate payloads in parallel.

Optimized Data Retrieval:

Fetching What’s Needed: When retrieving data from a server, you can use multiple payloads to request only the required data segments, thereby cutting down on data transfer and improving response times.
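
Pagination is one common way to fetch only what is needed. The Python sketch below shows the server-side slicing under assumed names: `get_page`, the `page_size` default of 20, and the `has_more` flag are illustrative choices, not part of any specific API.

```python
def get_page(items, page, page_size=20):
    """Return one slice of the full result set plus a flag for whether more remain."""
    start = (page - 1) * page_size  # pages are numbered from 1
    chunk = items[start:start + page_size]
    return {
        "page": page,
        "items": chunk,
        "has_more": start + page_size < len(items),
    }
```

The app requests page 1 when a list opens and asks for the next page only when the user scrolls near the end, so data that is never viewed is never transferred.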

By carefully choosing between single and multiple payloads based on your application’s needs, you can significantly enhance both speed and efficiency.

App-Side Cache:

App-side caching means storing and managing frequently accessed data locally on the device, a strategy that can substantially boost the speed and responsiveness of the application. The choice of local database is flexible and can be tailored to the specific needs of the project. For instance, in our Opencart App, which is built with Flutter, we use Hive to store API responses locally. If you’re interested in learning more about how to use Hive for app-side caching, along with examples, please follow the link below:

“API Caching with Hive and Bloc”

Prefetching Data Concept:

Up to this point, through the strategies and methods we’ve discussed, we’ve significantly optimized our app’s speed. By implementing Prefetching Cache, we have the opportunity to further enhance the application’s performance.

What Is Prefetching?

Prefetching involves loading data on a background thread before it’s actually needed and storing it locally. This way, when we need the data, we don’t have to wait for it to load from the server: the app shows the locally stored copy right away and can fetch updated data asynchronously in the background.

For instance, in our demos, we preloaded the product details that appear on the homepage. The advantage here is that the product detail screen is essentially just a tap away. As soon as a user taps on a product tile, the detail screen displays the data instantly.
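
The flow described above can be sketched like this. The example is written in Python for brevity and is not the Flutter code from our app. The class `ProductPrefetcher` and the `fetch_detail` callback are hypothetical names, and an in-memory dictionary stands in for the local database.

```python
import threading


class ProductPrefetcher:
    """Load product details in the background so a later tap finds them ready."""

    def __init__(self, fetch_detail):
        self._fetch_detail = fetch_detail  # hypothetical function: product_id -> detail
        self._cache = {}
        self._lock = threading.Lock()

    def prefetch(self, product_ids):
        """Start loading details on a background thread and return that thread."""
        worker = threading.Thread(
            target=self._load_all, args=(list(product_ids),), daemon=True
        )
        worker.start()
        return worker

    def _load_all(self, product_ids):
        for pid in product_ids:
            detail = self._fetch_detail(pid)
            with self._lock:
                self._cache[pid] = detail

    def get_detail(self, product_id):
        """Return the prefetched detail if present, otherwise fetch it now."""
        with self._lock:
            if product_id in self._cache:
                return self._cache[product_id]
        detail = self._fetch_detail(product_id)
        with self._lock:
            self._cache[product_id] = detail
        return detail
```

The home screen would call `prefetch` with the IDs of the products it displays. When the user taps a tile, `get_detail` returns immediately for any product whose prefetch has already finished.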

Conclusion

First and foremost, investing in a reliable hosting service is crucial for the overall performance and reliability of your OpenCart website. A dependable host not only ensures minimal downtime but also provides the necessary infrastructure to handle traffic spikes, thereby contributing to a smoother user experience. Additionally, the quality of the theme and extensions you choose can significantly impact both the aesthetics and functionality of your site. Opting for high-quality, well-designed themes and extensions will further enhance the user interface and can also positively affect site speed.

Secondly, implementing a robust caching solution is imperative for elevating the overall user experience and performance of your OpenCart site. A good caching system can drastically reduce load times and lead to quicker navigation, which in turn, improves user satisfaction. Beyond that, an optimized website tends to rank better in search engine results, providing an added advantage in terms of online visibility and organic traffic. Therefore, a well-thought-out investment in caching is not just a technical upgrade but also a strategic move for the long-term success of your online store.